It might seem like a rhetorical question, but we still wanted proof. People are debating whether AI can be used to generate fake news, so we decided to check a few things ourselves. First, we need to outline the basic threats posed by generative AI and define what we mean by fake news.

What really is fake news?

In the simplest words possible:

Fake news is information that is false or partially false, whether or not it is rooted in reality, and that shifts perceptions of a topic in the way its creator intended. It can take the form of an image, a rumor, a video, a news item, an article, and so on.

According to Wikipedia (short version):

“Fake news or hoax news is false or misleading information (hoaxes, propaganda, and disinformation) presented as news. Fake news often has the aim of damaging the reputation of a person or entity, or making money through advertising revenue. Although false news has always been spread throughout history, the term “fake news” was first used in the 1890s when sensational reports in newspapers were common. Nevertheless, the term does not have a fixed definition and has been applied broadly to any type of false information.”

What can we generate with AI to create fake news?

- Fake images

- Fake audio

- Generating ideas

- Generating stories

- Generating methods (not only for fake information but also for things like weapons instructions)

- Enhancing deepfake technology (but that’s another subject)

The AI boom has largely centered on two tools, Midjourney and ChatGPT, thanks to their popularity, the quality of their output, and their low barrier to entry. That is why we want to show you some of their possibilities and processes, so you can recognize the basic risks these two tools carry.

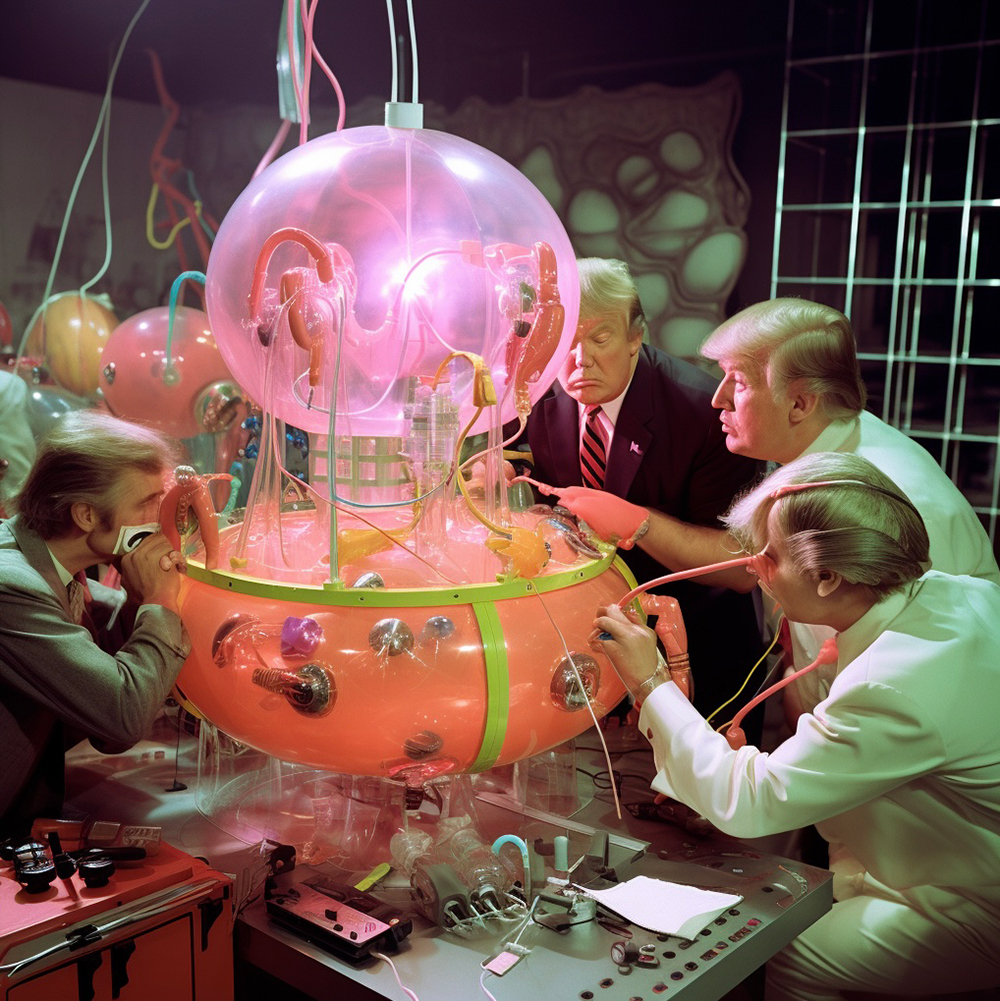

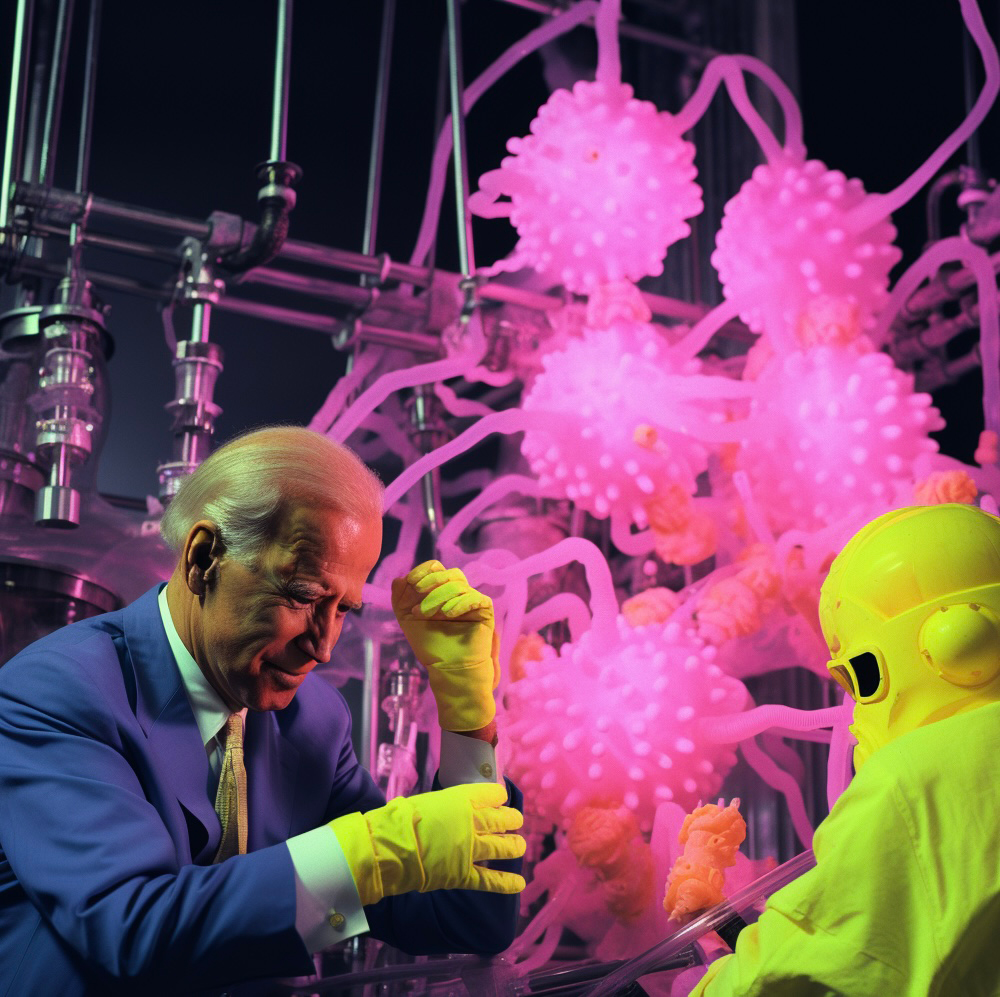

So, how easy is it? We set out to see how easily we could generate an image involving politics and politicians. To be fair, we tried it with both President Joe Biden and former President Donald Trump.

For Trump, we used the following prompt:

/imagine: retro photo of people dressed up as cyber technocrats creating new inflated president trump in crazy neon laboratory

For Biden, we used:

/imagine: retro photo of people dressed up as cyber technocrats creating new inflated president biden in crazy laboratory

Pretty simple and fun? Only if you use the results for entertainment rather than to start a world revolution. As you can see, the results are somewhat random: the age, the face, the surroundings. But would you believe that President Biden was in a Russian glitter factory? That depends entirely on you and your vision 😉

The funny thing is that we never used the name “Putin” or anything related to him, yet the archetype associated with the word “Russia” put him straight into our image.

Without proper adjustments and context, it is still a random internet fantasy. Your brain tells you it is just another product of those Instagram-famous “see how Pope Francis would look skating” apps. To produce convincing fake news, at least for a reasonably intelligent audience, you still need other apps or deeper knowledge of image generation, going beyond these basic tools.

So, in the case of visual generative AI, the main change is the speed at which you can create content that looks close to what you intended. The quality, however, is sometimes still relatively easy to distinguish from original photos and materials, at least for an attentive viewer.

We already live in a world full of applications designed to simplify processes and lower the barrier to entry, and that includes creating digital images. Even without generative AI, we are surrounded by apps that cut subjects out of their backgrounds in milliseconds: iOS has included this feature in the Photos app by default for some time, and the App Store is full of photo manipulation apps.

In fact, I see greater threats in text generation, because stories have a greater impact on people. They say a picture is worth a thousand words, but still… the ability to create fake news with images has not increased that much. Generated faces still bear only a loose resemblance to the real people, and there were already millions of photos of politicians online that could be combined into interesting collages. What we did not have until now was access to stories produced at high speed, with ideas generated from existing ones.

It is no accident that marketing, political or otherwise, relies on well-built stories and carefully crafted characters to shape the beliefs, aspirations, and needs of its audience.

In my opinion, a prompt like “create a touching story of a girl harmed by…” (e.g. “such-and-such war conflict”) can pose a greater threat than generating a photo with the same premise and parameters.

Why?

Generated stories can draw on connections to real events (books, articles, other stories, history), which makes them harder to identify as fake from the very beginning: they are rooted in reality, and there is no image to scrutinize for flaws.

Take this example: “Generate a fictional story based on facts about…”. We can then make our request even more precise and keep refining it…

In my opinion, such a story will be easier to believe than randomly generated photos of a politician. The speed of both kinds of generation increases the risk, but a polished story produced that quickly, essentially ready to publish straight from the generator, seems far more dangerous to me. Targeting a given topic or goal with fictional articles seems more likely to convince people that the content is true. Of course, backed by an image, such a story would be dramatically more effective, but comparing the two methods separately, raw text straight from the generator carries much greater risk, in my view, than generated images.

We still live in a society where the ability to distinguish reality from fiction depends largely on access to education and its quality, not to mention technical literacy. Culturally, people are slowly getting used to the idea that an image can be “fabricated” to serve someone’s purposes, but it is harder for us to accept that a whole chain of events, the entire history of a given individual, was invented purely for manipulation.

BUT

There is one area that is practically perfect and leaves the general public with virtually no chance: AI voice generation. With ordinary hearing, you cannot tell the difference. The only giveaway would be the words themselves…

Other fun examples